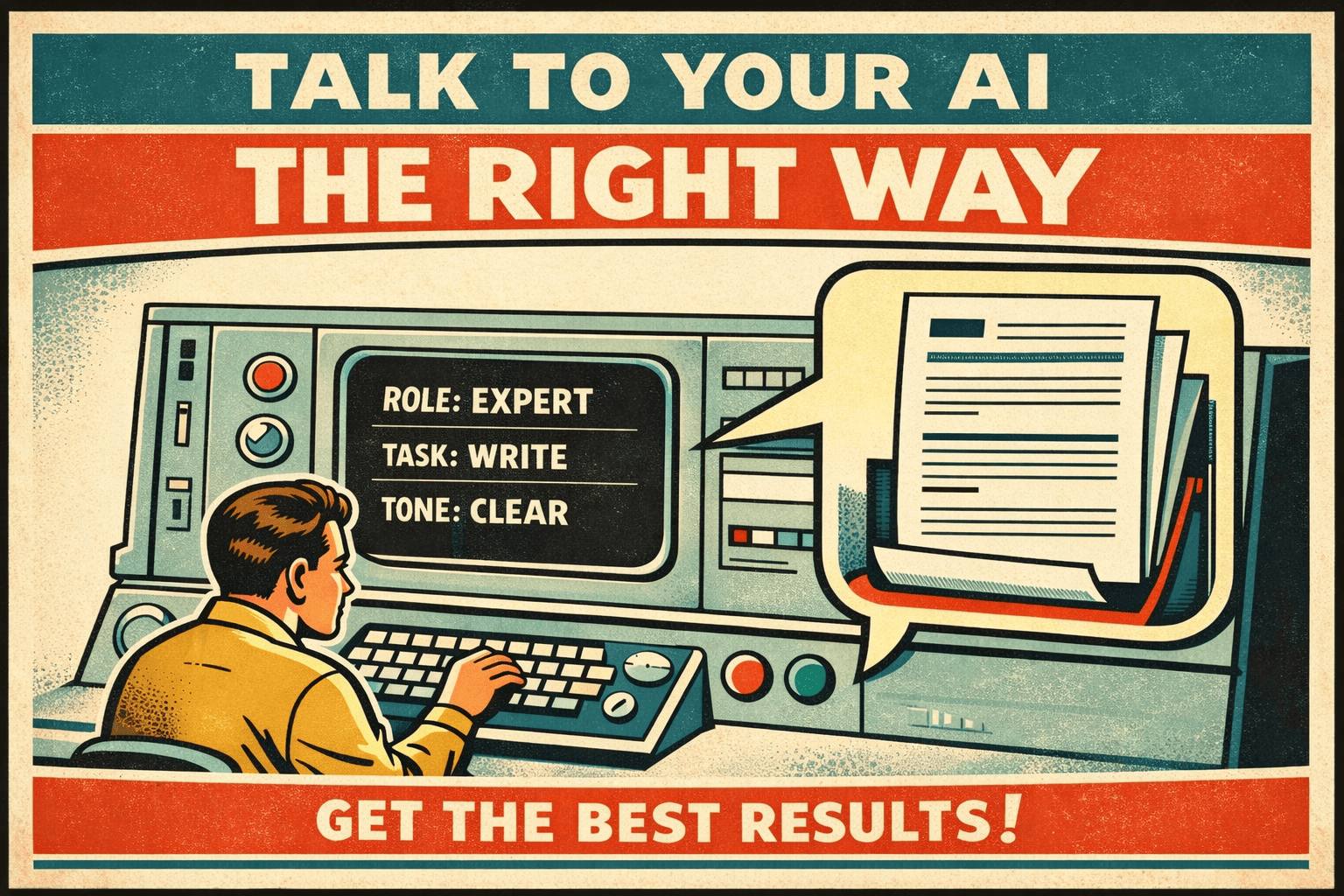

Prompt engineering is how you talk to AI so it actually does what you want.

That's the entire concept. You write instructions for a language model (ChatGPT, Claude, Gemini, whatever you're using), and the quality of those instructions directly determines the quality of what you get back. Vague input, vague output. Specific input, useful output.

The term sounds technical. It's not. If you've ever reworded a question to ChatGPT because the first answer was useless, you've done prompt engineering. The only difference between doing it casually and doing it well is knowing which techniques work, why they work, and when to use each one.

This guide covers all of that. Techniques with actual prompts you can copy. Before-and-after examples. The stuff that's changed since 2024 (a lot). And an honest take on where prompt engineering is headed, including the people who say it's already dead.

What Prompt Engineering Actually Means

Prompt engineering is the practice of designing inputs for AI language models to get specific, useful outputs. That's the textbook version. Here's what it means in practice.

Every AI model processes your input through billions of parameters trained on massive datasets. The model doesn't "understand" your question the way a person would. It predicts the most likely useful response based on patterns in its training data. Your prompt is the steering wheel. The model is the engine. Same engine, completely different destinations depending on how you steer.

A 2020 paper by Brown et al. at OpenAI ("Language Models are Few-Shot Learners") demonstrated that GPT-3's performance on tasks varied dramatically depending on how prompts were structured. On tasks such as simple arithmetic, the same model scored noticeably higher when the prompt included a few worked examples than when it got a bare question. Nothing changed in the model. Everything changed in the prompt.

That's why prompt engineering matters. You're not changing the AI. You're changing what you ask for and how you ask for it.

Why Most Prompts Fail

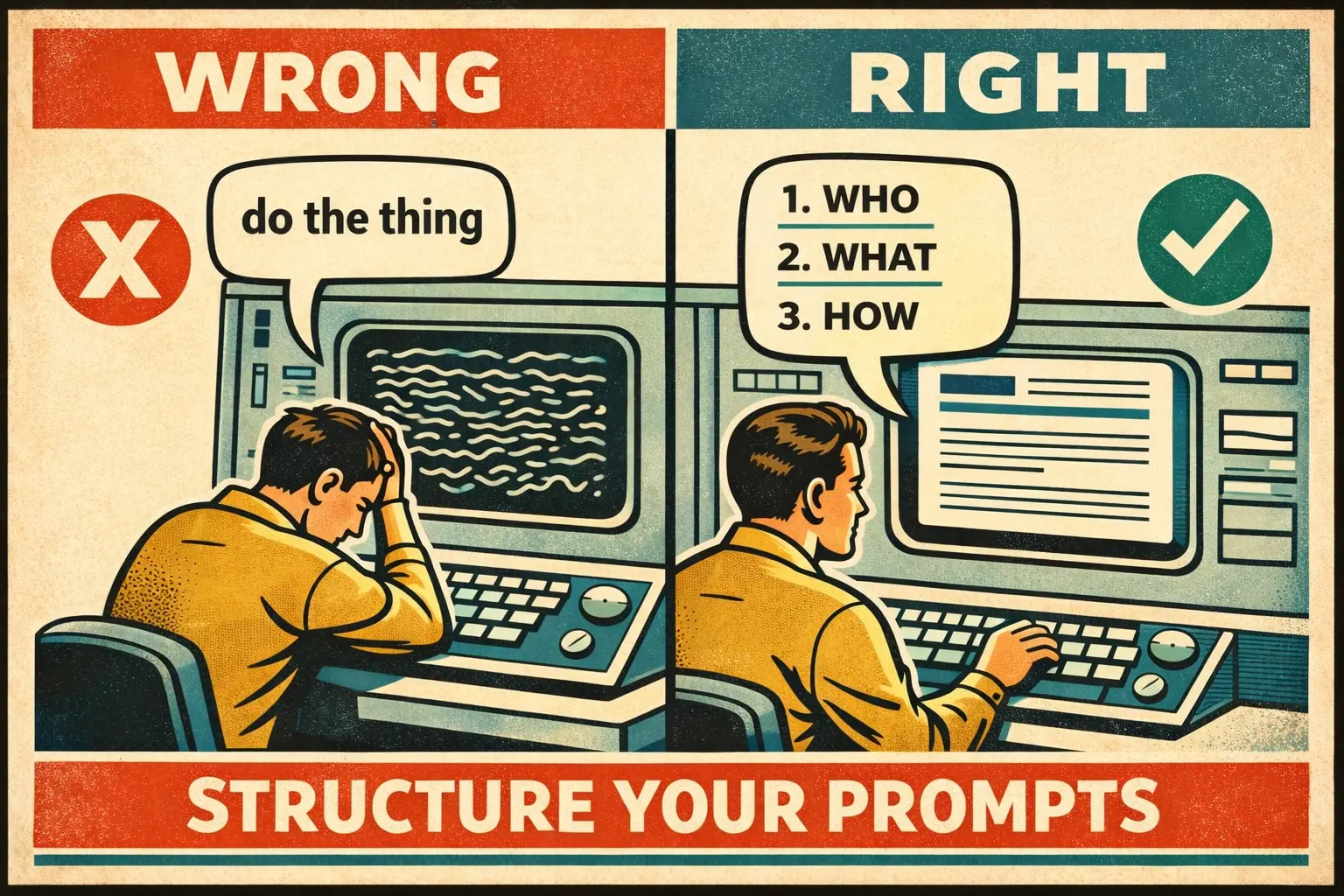

Before getting into techniques, it's worth understanding why the default way most people prompt AI doesn't work well.

The vague request. "Write me a marketing email" gives the model nothing to work with. It doesn't know your audience, your product, your tone, your goal, or your constraints. So it produces something generic that sounds like every other AI-generated marketing email.

The kitchen sink. "Write a 500-word blog post about sustainable fashion that's SEO-optimized for the keyword 'eco-friendly clothing' and includes statistics and is written in a casual tone but also professional and mentions three brands and has a call to action and..." Long, tangled prompts confuse models the same way they'd confuse a person. The model tries to satisfy everything and nails nothing.

The missing context. "Summarize this article" without telling the model who the summary is for, how long it should be, or what angle matters. A summary for a CEO looks different from a summary for a social media manager.

Good prompt engineering fixes all three. It's specific without being overwhelming, structured without being rigid, and always includes enough context for the model to make good decisions.

Prompt Engineering Techniques That Actually Work

There are dozens of named techniques in the academic literature. Most people need about six. Here are the ones that produce real results, with actual prompts you can test right now.

Zero-Shot Prompting

You give the model a task with no examples. Just instructions.

Prompt:

Classify the following customer review as positive, negative, or neutral. Only output the classification, nothing else.

Review: "The shoes arrived on time but the left one had a scuff mark on the toe. Customer service replaced them within a week."

Output: Positive

Zero-shot works for straightforward tasks where the model's training data already covers the pattern. Classification, translation, summarization, simple Q&A. If the task is common enough that the model has seen thousands of similar examples during training, you don't need to provide your own.
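Under the hood, a zero-shot prompt is just an instruction plus the input. Here's a minimal Python sketch, assuming you send the prompt to a model yourself (the API call is left out). It builds the review prompt from above and validates the reply, because even with "only output the classification" a model will occasionally add extra words. The function names are hypothetical, not from any library.

```python
LABELS = ("positive", "negative", "neutral")


def build_zero_shot_prompt(review: str) -> str:
    """Instruction plus input, with no examples: that's what makes it zero-shot."""
    return (
        "Classify the following customer review as positive, negative, or neutral. "
        "Only output the classification, nothing else.\n\n"
        f'Review: "{review}"'
    )


def parse_label(reply: str) -> str:
    """Normalize the model's reply and reject anything that isn't an allowed label."""
    label = reply.strip().strip(".").lower()
    if label not in LABELS:
        raise ValueError(f"Unexpected label: {reply!r}")
    return label


prompt = build_zero_shot_prompt(
    "The shoes arrived on time but the left one had a scuff mark on the toe. "
    "Customer service replaced them within a week."
)
print(prompt)
print(parse_label("Positive"))  # positive
```

Rejecting unexpected labels, instead of guessing, gives you a clean signal to retry or tighten the prompt.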

Few-Shot Prompting

You give the model a few examples of what you want before asking it to perform the task.

Prompt:

Convert the following product descriptions into one-line taglines.

Description: "A lightweight running shoe with responsive cushioning and breathable mesh upper designed for daily training."
Tagline: "Run daily. Feel nothing but fast."

Description: "A noise-canceling wireless headphone with 40-hour battery life and memory foam ear cups."
Tagline: "Silence everything except the music."

Description: "A cast iron skillet pre-seasoned with flaxseed oil, oven-safe to 500°F, with a helper handle for easy lifting."
Tagline:

Output: "Cook like your grandmother knew something you didn't."

The Brown et al. (2020) paper reported that GPT-3's performance on many benchmarks, including SuperGLUE, generally improved as more examples were added to the prompt. The examples don't update the model's weights or teach it new facts. They calibrate its output style, format, and reasoning pattern.
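If you reuse a few-shot prompt, it helps to assemble it from data instead of retyping it. A minimal sketch that mirrors the Description/Tagline layout above. The helper name is hypothetical, and the examples are the two from this section.

```python
def build_few_shot_prompt(
    instruction: str, examples: list[tuple[str, str]], query: str
) -> str:
    """Join the instruction, the worked examples, and the new input into one prompt.

    The final "Tagline:" is left blank so the most natural continuation
    is a tagline written in the same style as the examples.
    """
    lines = [instruction, ""]
    for description, tagline in examples:
        lines.append(f'Description: "{description}"')
        lines.append(f'Tagline: "{tagline}"')
        lines.append("")  # blank line between examples
    lines.append(f'Description: "{query}"')
    lines.append("Tagline:")
    return "\n".join(lines)


EXAMPLES = [
    (
        "A lightweight running shoe with responsive cushioning and breathable "
        "mesh upper designed for daily training.",
        "Run daily. Feel nothing but fast.",
    ),
    (
        "A noise-canceling wireless headphone with 40-hour battery life and "
        "memory foam ear cups.",
        "Silence everything except the music.",
    ),
]

prompt = build_few_shot_prompt(
    "Convert the following product descriptions into one-line taglines.",
    EXAMPLES,
    "A cast iron skillet pre-seasoned with flaxseed oil, oven-safe to 500 degrees F, "
    "with a helper handle for easy lifting.",
)
```

Keeping examples in a list makes it easy to swap them out and see which set calibrates the output best.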

Chain-of-Thought Prompting

You ask the model to show its reasoning step by step before giving an answer. For multi-step problems like math, logic, and planning, this is one of the most impactful techniques you can use.

Prompt:

A store sells notebooks for $4 each. They're running a promotion: buy 3, get the 4th free. If someone buys 11 notebooks, how much do they pay? Think through this step by step.

Output:

Step 1: For every 4 notebooks, the customer pays for 3. So I need to figure out how many groups of 4 fit into 11.

Step 2: 11 ÷ 4 = 2 groups with 3 remaining.

Step 3: Each group costs 3 × $4 = $12. Two groups = $24.

Step 4: The remaining 3 notebooks are paid at full price: 3 × $4 = $12.

Step 5: Total = $24 + $12 = $36.

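Google researchers (Wei et al., 2022) introduced the technique in "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models." Their prompts included a few worked examples with the reasoning written out, and with the 540B-parameter PaLM model this raised accuracy on the GSM8K math word-problem benchmark from about 18% to about 57%. The simpler "think step by step" instruction used above is the zero-shot variant, described by Kojima et al. (2022). Either way, the model doesn't just guess the answer. It works through the logic, which makes mistakes more likely to surface and easier to spot.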
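One practical payoff of step-by-step output is that the steps can be checked. When a problem has a single right answer, like the notebook promotion, a few lines of code make a handy referee. This is a hypothetical helper, and it assumes the free notebook is only granted for a complete group of four, which is the reading the model used above.

```python
def promo_total(quantity: int, unit_price: float, buy: int = 3, free: int = 1) -> float:
    """Total cost under a "buy `buy`, get `free` free" promotion.

    Assumption: free items are only granted for complete groups of
    (buy + free) items; any leftover items are paid at full price.
    """
    group_size = buy + free
    full_groups, leftover = divmod(quantity, group_size)
    paid_items = full_groups * buy + leftover
    return paid_items * unit_price


print(promo_total(11, 4))  # 36, matching the model's step-by-step answer
print(promo_total(12, 4))  # also 36: the 12th notebook completes a third group
```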

Role Prompting

You tell the model who it is before giving it the task. This sets the tone, vocabulary, depth, and perspective of the response.

Prompt:

You are a senior data engineer with 15 years of experience in ETL pipelines. A junior developer asks you: "Should I use pandas or PySpark for processing 50GB of CSV files daily?" Explain your recommendation.

The response will be completely different from asking the same question without the role. With the role, you get technical depth, trade-off analysis, and real-world considerations. Without it, you get a generic comparison that reads like a blog post from 2019.

Role prompting likely works because it steers the model toward specific patterns in its training data. When you say "senior data engineer," the model draws on content written by and for that audience. Different role, different vocabulary, different assumptions about what the reader knows.
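A quick way to see role prompting in action is to hold the task fixed, vary only the role, and compare the responses side by side. A minimal sketch; the second role is a hypothetical contrast, the helper name is made up for illustration, and the model call itself is left to you.

```python
TASK = (
    'A junior developer asks you: "Should I use pandas or PySpark for '
    'processing 50GB of CSV files daily?" Explain your recommendation.'
)

# Only the role changes between variants, so differences in the output
# can be attributed to the role rather than to the task wording.
ROLES = [
    "a senior data engineer with 15 years of experience in ETL pipelines",
    "a high school computer science teacher",  # hypothetical contrast
]


def role_prompt(role: str, task: str) -> str:
    """Prefix the task with a one-line persona."""
    return f"You are {role}. {task}"


variants = {role: role_prompt(role, TASK) for role in ROLES}
for role, prompt in variants.items():
    print(f"--- {role} ---\n{prompt}\n")
```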

System Prompting

System prompts are instructions that sit above the conversation. They define persistent behavior rules that apply to every response the model generates in that session.

System prompt:

You are a customer service agent for a SaaS company. You are helpful, concise, and professional. Never reveal internal pricing logic. If the customer asks about enterprise pricing, direct them to sales@company.com. Always confirm the customer's issue before suggesting a solution. Keep responses under 150 words.

System prompts are the foundation of nearly every AI chatbot, assistant, and agent in production today. They're not a conversation technique. They're architecture. Every tool in our prompt library uses a system prompt to define its behavior, constraints, and output format.
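In API terms, many chat-style interfaces (OpenAI's Chat Completions API, for example) take a list of messages tagged with roles such as "system", "user", and "assistant"; some, like Anthropic's Messages API, take the system prompt as a separate parameter instead. Because each request is typically stateless, the system prompt is sent again with every request, which is what makes its rules persistent. A minimal sketch that only builds the message list; the class name is hypothetical and the network call is omitted.

```python
from dataclasses import dataclass, field

SYSTEM_PROMPT = (
    "You are a customer service agent for a SaaS company. You are helpful, concise, "
    "and professional. Never reveal internal pricing logic. If the customer asks about "
    "enterprise pricing, direct them to sales@company.com. Always confirm the customer's "
    "issue before suggesting a solution. Keep responses under 150 words."
)


@dataclass
class Conversation:
    """A fixed system prompt plus the running user/assistant history."""

    system_prompt: str
    history: list[dict[str, str]] = field(default_factory=list)

    def add_user(self, text: str) -> None:
        self.history.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self.history.append({"role": "assistant", "content": text})

    def messages(self) -> list[dict[str, str]]:
        """Messages for the next request, with the system prompt always first."""
        return [{"role": "system", "content": self.system_prompt}, *self.history]


chat = Conversation(SYSTEM_PROMPT)
chat.add_user("How much does the enterprise plan cost?")
for message in chat.messages():
    print(f'{message["role"]}: {message["content"][:60]}')
```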

Prompt Chaining

Instead of one massive prompt, you break a complex task into a sequence of smaller prompts where each one's output feeds into the next.

Step 1: "List 5 key themes from this customer feedback dataset."

Step 2: "For each theme, identify 2-3 representative customer quotes."

Step 3: "Write a one-page executive summary organized by theme, using the quotes as evidence."

Chaining works better than a single prompt for the same reason you don't write an entire project plan in one sentence. Each step is focused, verifiable, and easy to debug if something goes wrong.
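In code, a chain is just a sequence of calls where each prompt embeds the previous output. A minimal sketch of the three-step feedback chain, assuming `model` is any function that takes a prompt string and returns text (in practice, a hypothetical wrapper around your API of choice). The stub at the bottom stands in for a real model so the data flow is visible.

```python
from typing import Callable

# Assumption: a "model" is any function that maps a prompt string to a reply.
Model = Callable[[str], str]


def run_feedback_chain(model: Model, feedback: str) -> dict[str, str]:
    """Run the three steps in order, feeding each output into the next prompt.

    Returns every intermediate result so each step can be checked on its own.
    """
    themes = model(
        "List 5 key themes from this customer feedback dataset.\n\n" + feedback
    )
    quotes = model(
        "For each theme, identify 2-3 representative customer quotes.\n\n"
        f"Themes:\n{themes}\n\nFeedback:\n{feedback}"
    )
    summary = model(
        "Write a one-page executive summary organized by theme, "
        f"using the quotes as evidence.\n\nThemes and quotes:\n{quotes}"
    )
    return {"themes": themes, "quotes": quotes, "summary": summary}


def stub_model(prompt: str) -> str:
    # Stand-in for a real model: echoes the first line of the prompt it received.
    return f"[reply to: {prompt.splitlines()[0]}]"


results = run_feedback_chain(stub_model, "Checkout is slow.\nLove the new dashboard.")
for step, output in results.items():
    print(step, "->", output)
```

Returning the intermediate outputs, not just the final summary, is what makes each step easy to debug.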

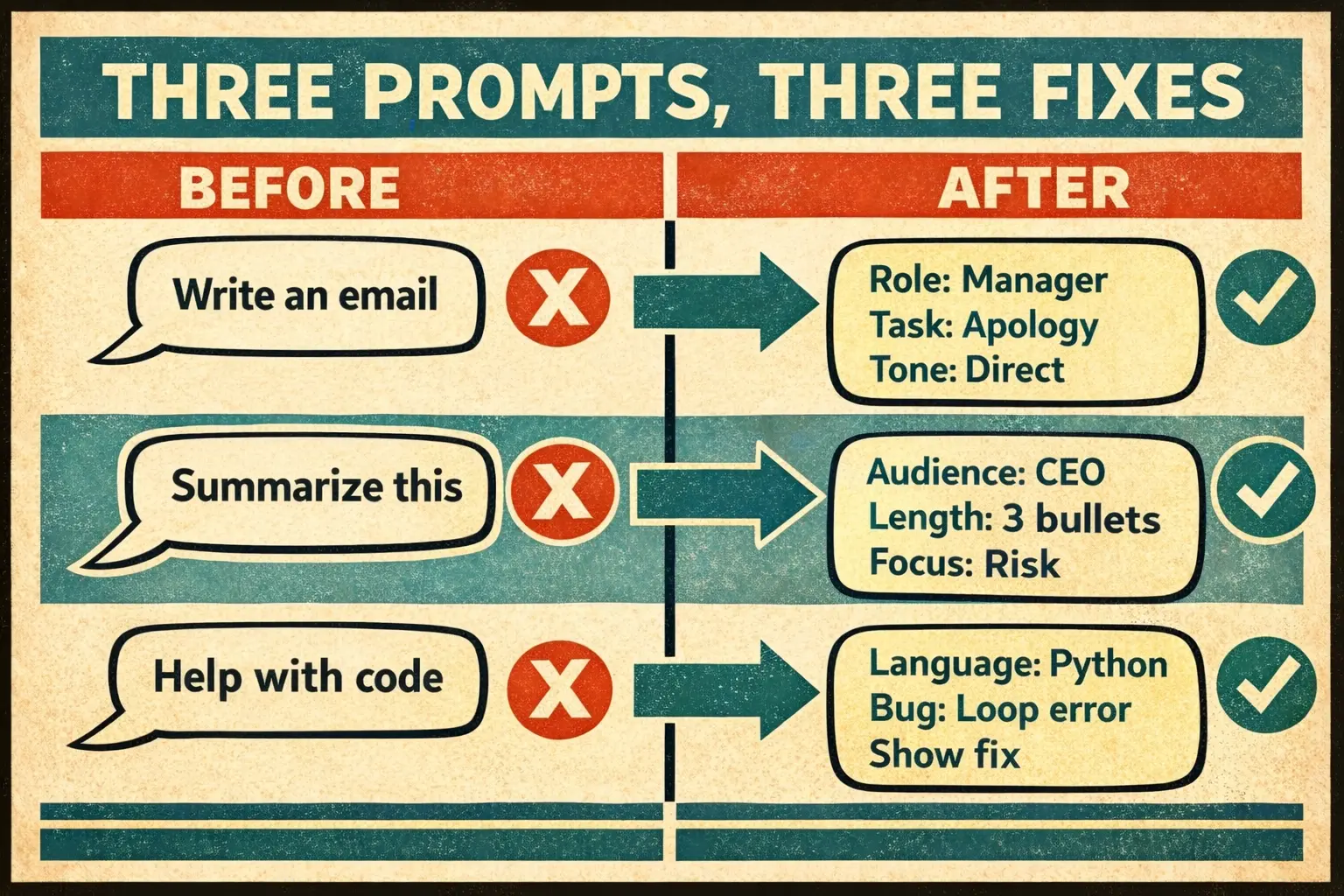

Prompt Engineering Examples: Before and After

Theory is useful. Seeing it work is better. Here are three real scenarios showing the difference between a casual prompt and an engineered one.

Writing a Cold Email

Before:

Write a cold email for my SaaS product.

After:

Write a cold outreach email for a project management SaaS targeting marketing agency owners with 10-50 employees. The email should be under 120 words, open with a specific pain point (managing multiple client timelines), mention one concrete result (a customer reduced missed deadlines by 60%), and end with a soft CTA asking for a 15-minute call. Tone: direct, not salesy. No exclamation marks.

The first prompt produces generic filler. The second produces something you'd actually send. The difference isn't talent. It's specificity.

Analyzing Data

Before:

Analyze this sales data.

After:

I'm pasting Q4 2025 sales data for our three product lines (A, B, C) across four regions (NA, EU, APAC, LATAM). Identify: (1) which product-region combination grew fastest quarter-over-quarter, (2) which combination declined, (3) any seasonal patterns compared to Q4 2024 if you can infer them. Present findings as bullet points, then a one-paragraph summary a VP of Sales could forward to their team.

The first gets you a vague paragraph about trends. The second gets you an actual analysis with the right audience, structure, and actionable findings.

Generating Code

Before:

Write a Python function to clean data.

After:

Write a Python function called `clean_survey_responses` that takes a pandas DataFrame with columns: `respondent_id` (int), `age` (int, may have nulls and negatives), `satisfaction_score` (float, 1-5 scale, may have values outside range), `open_response` (str, may be empty). The function should: remove rows where age is null or negative, clip satisfaction_score to 1-5 range, replace empty open_response with "No response provided", and return the cleaned DataFrame. Include type hints and a docstring.

Same AI model. Completely different output. That's prompt engineering.
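For reference, here's roughly what a good answer to the "After" prompt looks like. This is a hand-written sketch, not actual model output, and it assumes pandas is installed. It also makes two choices the prompt leaves open: missing (NaN) and whitespace-only responses count as empty, and the original row index is kept.

```python
import pandas as pd


def clean_survey_responses(df: pd.DataFrame) -> pd.DataFrame:
    """Return a cleaned copy of a survey-responses DataFrame.

    - Drops rows where ``age`` is null or negative.
    - Clips ``satisfaction_score`` into the 1-5 range.
    - Replaces empty ``open_response`` values with "No response provided".
      (Assumption: NaN and whitespace-only strings also count as empty.)
    """
    # Comparisons with NaN are False anyway, but notna() makes the intent explicit.
    keep = df["age"].notna() & (df["age"] >= 0)
    cleaned = df.loc[keep].copy()  # copy so the edits below don't touch the input

    cleaned["satisfaction_score"] = cleaned["satisfaction_score"].clip(lower=1, upper=5)

    responses = cleaned["open_response"].fillna("").astype(str)
    cleaned["open_response"] = responses.where(
        responses.str.strip() != "", "No response provided"
    )
    return cleaned


survey = pd.DataFrame(
    {
        "respondent_id": [1, 2, 3, 4],
        "age": [34, None, -5, 27],
        "satisfaction_score": [4.5, 3.0, 2.0, 7.0],
        "open_response": ["Fast onboarding", "", "Too pricey", "   "],
    }
)
print(clean_survey_responses(survey))
```

Notice how every line maps back to a requirement in the prompt. That traceability is what the specific version buys you.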

Prompt Engineering Best Practices

Six rules that apply across every model and every use case.

1. Start with the output format. Tell the model what shape the answer should take before you describe the task. "Return a JSON object with keys: summary, sentiment, action_items" is clearer than describing the task and hoping the format works out.

2. Give the model a role when the task needs expertise. "You are a tax accountant" produces different advice than "You are a financial advisor." Pick the role that matches the knowledge you need.

3. Include constraints. Word counts, forbidden topics, required sections, output language, audience level. Constraints aren't limitations. They're focus.

4. Show, don't describe. If you want a specific writing style, paste an example of that style. A 50-word example communicates more than 200 words of description.

5. Iterate in the same conversation. When the first output isn't right, don't start over. Tell the model what was wrong: "Good structure, but the tone is too formal. Rewrite with a conversational voice. Keep the same points." Refinement prompts are half the skill.

6. Test across models. A prompt that works perfectly in ChatGPT might produce different results in Claude or Gemini. Each model has different strengths. The prompt library is a good place to test how the same prompt pattern performs across different tasks.
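Rule 1 pays off most when code consumes the output. A minimal sketch, assuming you asked for the JSON object described in rule 1: parse the reply with Python's standard `json` module and reject it if a required key is missing, rather than passing malformed data downstream. The function name is hypothetical.

```python
import json

REQUIRED_KEYS = {"summary", "sentiment", "action_items"}


def parse_structured_reply(reply: str) -> dict:
    """Parse a reply that was requested as a JSON object with fixed keys.

    Raises ValueError (json.JSONDecodeError is a subclass) if the reply is not
    valid JSON, is not an object, or is missing any required key.
    """
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"Missing keys: {sorted(missing)}")
    return data


reply = '{"summary": "Login bug fixed", "sentiment": "positive", "action_items": ["Close ticket"]}'
print(parse_structured_reply(reply)["action_items"])
```

When parsing fails, the error message makes a ready-made refinement prompt in the spirit of rule 5.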

Prompt Engineering in Practice: Where People Actually Use This

The techniques above aren't academic exercises. They're running in production at companies you've used this week.

Customer service chatbots. Every AI chatbot uses system prompts and role prompting to stay on-brand and within scope. The best ones use prompt chaining to first classify the customer's issue, then retrieve relevant help articles, then generate a response.

Code generation. GitHub Copilot, Cursor, and similar tools rely on context-rich prompts built from your current file, recent edits, and project structure. The better the context, the better the suggestions.

Content creation. Marketing teams use few-shot prompting to maintain brand voice across hundreds of pieces of content. You include three examples of your style in the prompt, and the model calibrates each output to match.

Data analysis. Analysts use chain-of-thought prompting to walk models through multi-step calculations, which makes errors easier to catch than when the prompt asks only for the final answer.

Research synthesis. Prompt chaining turns a stack of papers into structured literature reviews. One prompt extracts key findings from each paper. Another identifies contradictions. A third synthesizes the whole thing into an executive brief.

Is Prompt Engineering Dead? The Context Engineering Shift

You'll hear this question a lot in 2026. Gartner started using the term "context engineering" in late 2025 to describe the evolution beyond basic prompt writing. Some people interpreted that as "prompt engineering is dead." It isn't. But it's changing.

Here's what actually happened. Early prompt engineering (2022-2023) was mostly about figuring out magic words and clever phrasings to trick models into better outputs. "Let's think step by step" was treated like a cheat code. And for a while, it kind of was.

But models got smarter. GPT-4, Claude 3, Gemini Ultra. These models rely far less on tricks. What they need is context. The shift from prompt engineering to context engineering is really a shift from "how do I phrase this cleverly?" to "what information does the model need to do this well?"

Context engineering includes:

- Retrieval-Augmented Generation (RAG): Pulling relevant documents into the prompt window so the model has real data to work with, not just its training knowledge.

- Tool use and function calling: Letting the model call APIs, search databases, and take actions instead of just generating text.

- Memory systems: Giving models access to previous conversations and learned user preferences across sessions.

- Structured system prompts: Multi-section instructions that define roles, constraints, output formats, and fallback behaviors.
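Here's the RAG idea at toy scale. Production systems usually rank documents by embedding similarity in a vector store; this sketch swaps in simple word overlap so it runs with nothing but the standard library. The documents and function names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    # sorted() is stable even with reverse=True, so ties keep document order.
    ranked = sorted(
        documents, key=lambda doc: len(query_words & tokenize(doc)), reverse=True
    )
    return ranked[:k]


def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Put the retrieved documents into the prompt as grounding context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer the question using only the context below. "
        "If the context doesn't contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


DOCS = [  # hypothetical knowledge-base snippets
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Enterprise pricing questions go to the sales team.",
]

print(build_rag_prompt("How long do refunds take to be processed?", DOCS, k=1))
```

The model never needs to have seen your refund policy during training. It only needs the right snippet in its context window.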

Prompt engineering didn't die. It grew up. The fundamentals (specificity, structure, examples, chain-of-thought) still matter. They're just part of a bigger system now.

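Stack Overflow's 2024 developer survey found that 76% of respondents were using or planning to use AI tools in their development process, and one of the most common challenges they reported was that the tools lack context about their codebase, internal architecture, and company knowledge. That's a prompt and context engineering problem. It's not going away because we gave it a fancier name.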

Prompt Engineering Careers in 2026

Let's talk money and market reality.

Salary ranges. Based on Glassdoor and LinkedIn data, most prompt engineering roles in the US pay between $90,000 and $175,000 annually. The keyword "prompt engineering salary" gets 1,900 searches per month, so people are asking. Senior roles at AI companies and large enterprises sit at the top of that range. Entry-level and freelance roles sit below it, starting around $60,000 to $80,000.

Job titles are evolving. Pure "prompt engineer" job postings peaked in mid-2024 and have been declining. But the skills haven't disappeared. They've been absorbed into other roles. AI engineers, ML engineers, product managers, and even content strategists now list prompt engineering as a core skill. The work didn't go away. The dedicated title is becoming rarer because everyone's expected to do it.

Skills that matter:

- Writing clearly (this is 80% of the job)

- Understanding how language models work (not at PhD level, but beyond "it's magic")

- Domain expertise in your field (a lawyer who can prompt well beats a prompt specialist who doesn't know law)

- Basic Python (for API access, testing, and automation)

- Comfort with ambiguity (models surprise you constantly)

Honest take. If you're considering "prompt engineer" as a standalone career, hedge your bets. Learn the fundamentals here, but also learn to build with AI APIs, understand RAG architectures, and pick up a domain specialty. The people making the most money with prompt engineering skills are the ones who combine them with something else.

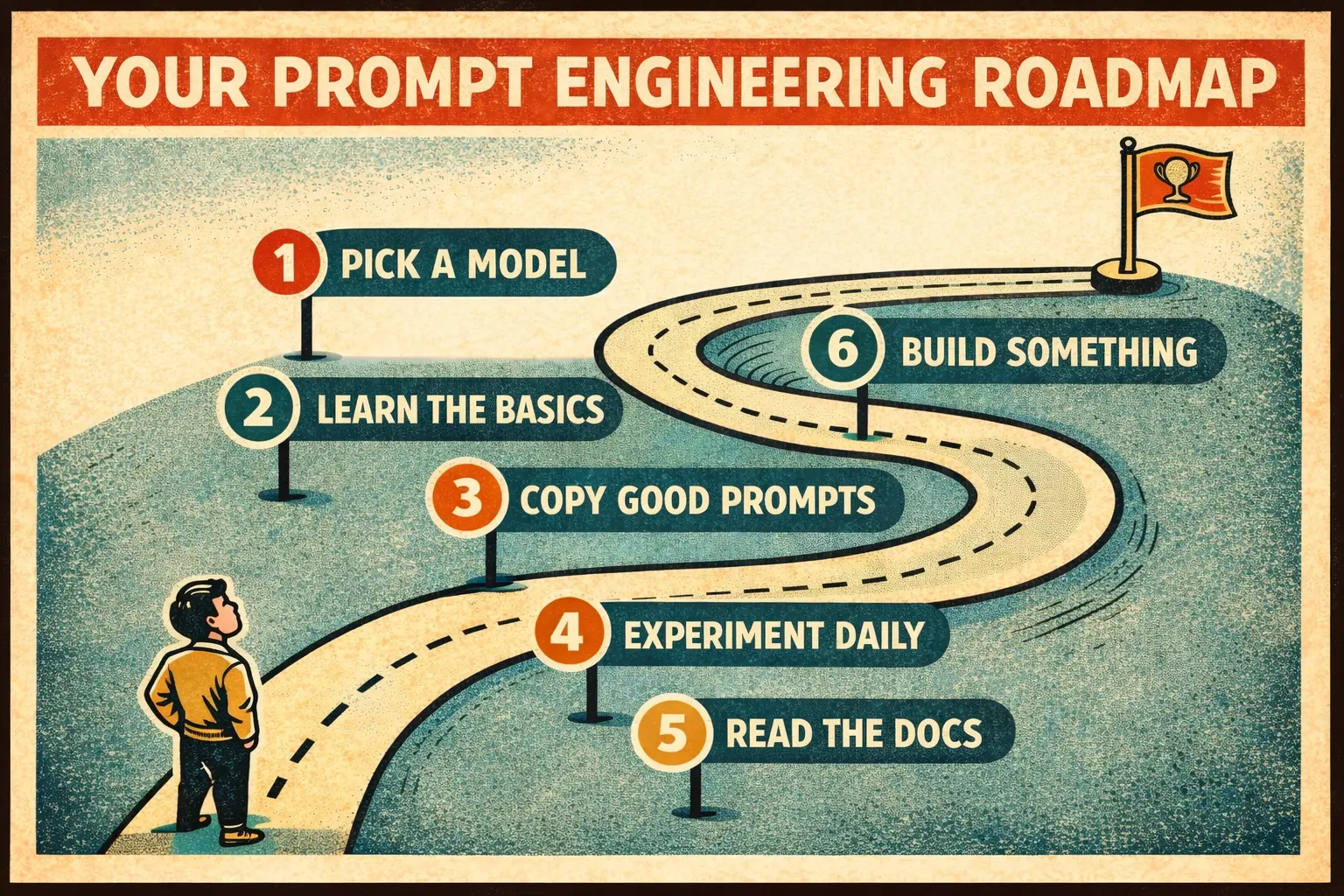

How to Start Prompt Engineering Today

Skip the $500 course. You don't need one.

Step 1: Pick one model and learn it deeply. ChatGPT, Claude, or Gemini. It doesn't matter which. Open a free account and start using it daily for real tasks. Not toy examples. Actual work you need to get done.

Step 2: Practice the core loop. Write a prompt. Read the output. Figure out what went wrong. Rewrite the prompt. Repeat. That loop is the entire skill. You can read about chain-of-thought prompting all day, but you won't internalize it until you see the difference in your own results.

Step 3: Study good prompts. Browse the prompt library and look at how professional prompts are structured. Notice the role definitions, the constraints, the output formatting. Reverse-engineer what makes them work.

Step 4: Try different techniques on the same task. Take one task you do regularly. Write a zero-shot version, a few-shot version, and a chain-of-thought version. Compare the outputs. You'll develop an intuition for which technique fits which situation.

Step 5: Read the model documentation. OpenAI's prompt engineering guide, Anthropic's prompt design documentation, and Google's Gemini prompting guide are all free. They're written by the teams who built the models, and they contain specific advice that generic courses skip.

Step 6: Build something. The fastest path from "I know prompt engineering" to "I can prove it" is building an AI feature, tool, or workflow that solves a real problem. Use an API. Chain some prompts together. Make it work. That project teaches more than any certification.
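Steps 2 and 4 get much easier with a small test set. Instead of eyeballing outputs, keep a handful of inputs with known answers and score each prompt version against them. A minimal sketch for a positive/negative classification task: `model` is assumed to be a function you write around whichever API you use, and the templates, test cases, and stub are hypothetical. Asking the chain-of-thought version to put the label on its last line is one simple way to score it alongside the others.

```python
from typing import Callable

# Assumption: a "model" is any function that maps a prompt string to a reply.
Model = Callable[[str], str]

TEMPLATES = {
    "zero-shot": "Classify the review as positive or negative.\nReview: {input}",
    "few-shot": (
        "Classify the review as positive or negative.\n"
        "Review: Arrived broken.\nLabel: negative\n"
        "Review: Fits perfectly.\nLabel: positive\n"
        "Review: {input}\nLabel:"
    ),
    "chain-of-thought": (
        "Classify the review as positive or negative. Think step by step, "
        "then give only the label on the last line.\nReview: {input}"
    ),
}


def final_line(reply: str) -> str:
    """Last non-empty line, lowercased, so chain-of-thought replies can be scored too."""
    lines = [line.strip() for line in reply.strip().splitlines() if line.strip()]
    return lines[-1].lower() if lines else ""


def accuracy(model: Model, template: str, cases: list[tuple[str, str]]) -> float:
    """Share of cases where the reply's final line equals the expected label.

    `template` uses an {input} placeholder and should contain no other braces.
    """
    hits = sum(
        final_line(model(template.format(input=text))) == expected.lower()
        for text, expected in cases
    )
    return hits / len(cases)


def compare(model: Model, cases: list[tuple[str, str]]) -> dict[str, float]:
    return {name: accuracy(model, t, cases) for name, t in TEMPLATES.items()}


def keyword_stub(prompt: str) -> str:
    # Stand-in for a real model: labels the last review by a single keyword.
    last_review = prompt.rsplit("Review:", 1)[-1].lower()
    return "positive" if "great" in last_review else "negative"


CASES = [("Great fit, would buy again.", "positive"), ("Fell apart in a week.", "negative")]
print(compare(keyword_stub, CASES))
```

With a real model the scores will differ between templates, and that difference is exactly the intuition Step 4 is meant to build.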

Frequently Asked Questions

What exactly does a prompt engineer do? A prompt engineer designs, tests, and refines the instructions that AI models receive. In practice, this means writing system prompts for chatbots, creating prompt templates for content teams, building few-shot examples for classification tasks, and iterating on prompts until they consistently produce the right output. It's part writing, part debugging, part understanding how models think.

Is prompt engineering difficult? The basics are simple. Writing a clear, specific prompt doesn't require any technical background. The difficulty increases with the complexity of the task. Getting a model to reliably extract structured data from messy inputs, or to reason through multi-step problems without hallucinating, takes practice and technical understanding.

What are the three main types of prompt engineering? The most common framework groups techniques into three categories: zero-shot (no examples), few-shot (with examples), and chain-of-thought (step-by-step reasoning). In practice, most effective prompts combine elements from all three, and there are many additional techniques (role prompting, prompt chaining, self-refine) that don't fit neatly into this framework.

What's the difference between prompt engineering and context engineering? Prompt engineering focuses on crafting the instructions you give to an AI model. Context engineering is a broader concept that includes everything the model needs to perform well: the prompt itself, plus retrieved documents (RAG), tool access, memory from previous interactions, and system-level configuration. Prompt engineering is a component of context engineering, not a replacement for it.

Are prompt engineers still in demand? The dedicated "prompt engineer" job title is becoming less common. The underlying skills are in higher demand than ever. Companies expect AI engineers, product managers, content teams, and developers to understand prompt engineering as a core competency. Glassdoor data shows prompt engineering skills appearing in 3x more job descriptions in 2026 compared to 2024.